Migrating to Cloudflare Pages

Moving from Cloudflare as a proxy to Cloudflare as my hosting platform.

TL;DR

I recently decided to evaluate Cloudflare as a static site hosting provider. This post covers my findings and migration from AWS Amplify.

Amplify Overview

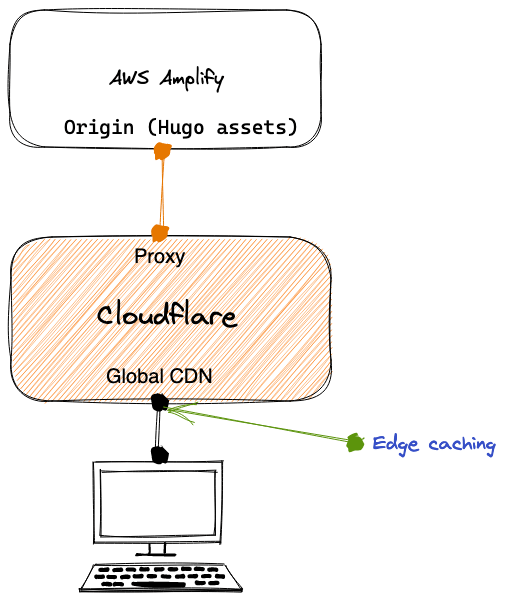

The Amplify configuration is unchanged from the previous migration post. The website is statically generated content built with Hugo (noted as Hugo assets in the diagram). Cloudflare provides a proxy to save on bandwidth and provide a CDN function.

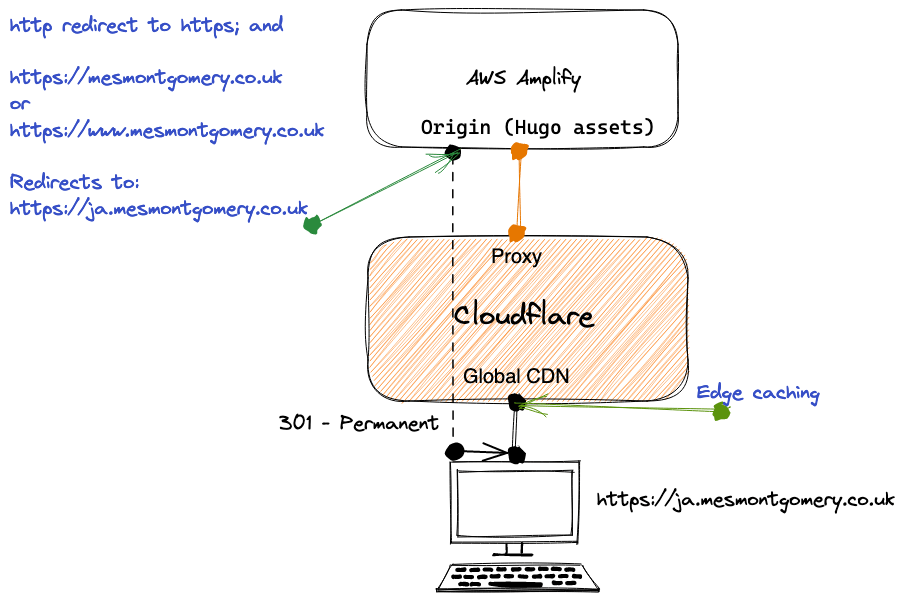

Besides providing hosting for ja.mesmontgomery.co.uk, Amplify provides several redirects.

Cloudflare Pages

Cloudflare pitch Pages in this blog post. The key benefit for me was the migration of the origin to the edge itself.

With Pages, your site is deployed directly to our edge, milliseconds away from customers, and at a global scale.

A successful transition required:

- Git-based deployment

- HTTP to HTTPS redirection

- Alias redirection

Git deployment

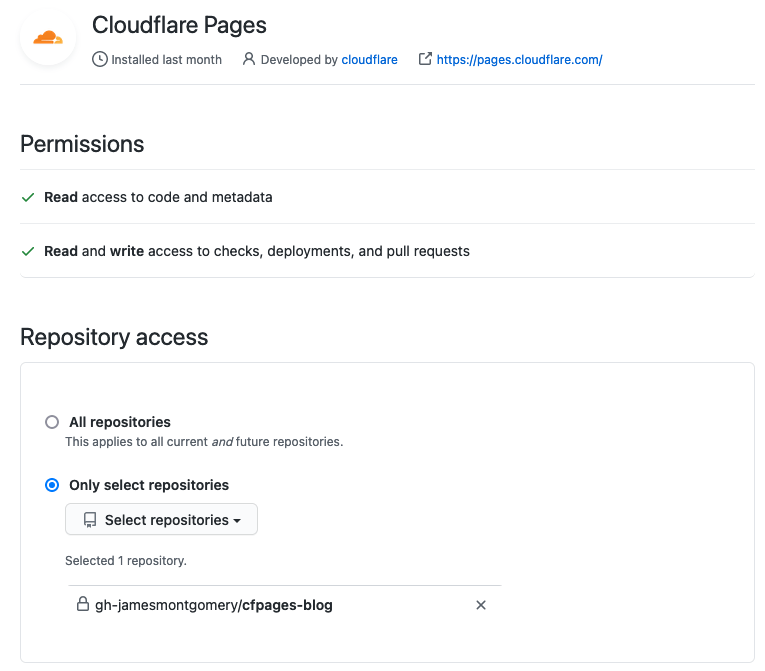

Cloudflare Pages supports only GitHub at the time of writing. Using GitHub required a change on my part. However, I liked the ability to use a private repository as the source without granting access to all my repositories:

Redirection

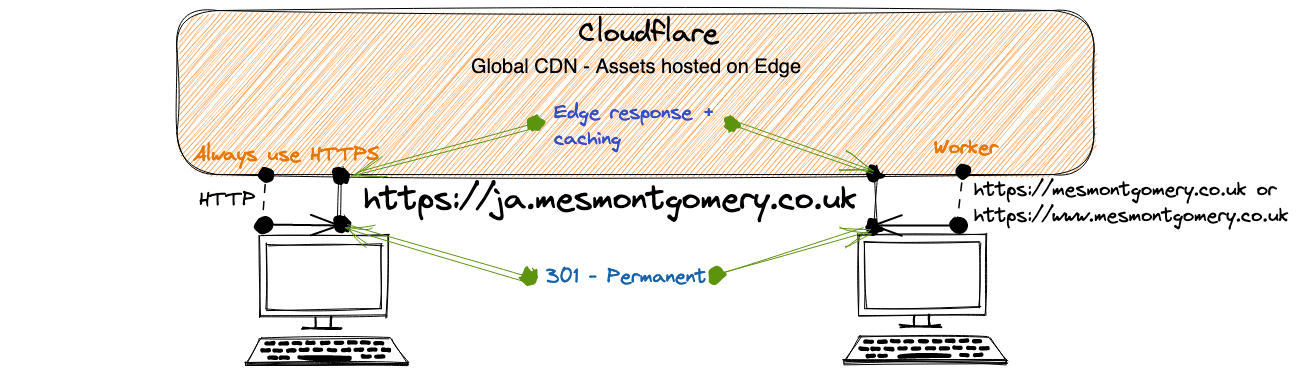

Replacing the redirection capabilities in AWS Amplify with analogues in Cloudflare is a straightforward task with page rules. The settings Always use HTTPS and Forwarding URL would accomplish the task.

However, I am working within the constraints of the free plan. I have already used my allowance of page rules. Below I cover my solution to working inside these limits.

HTTP to HTTPS redirection

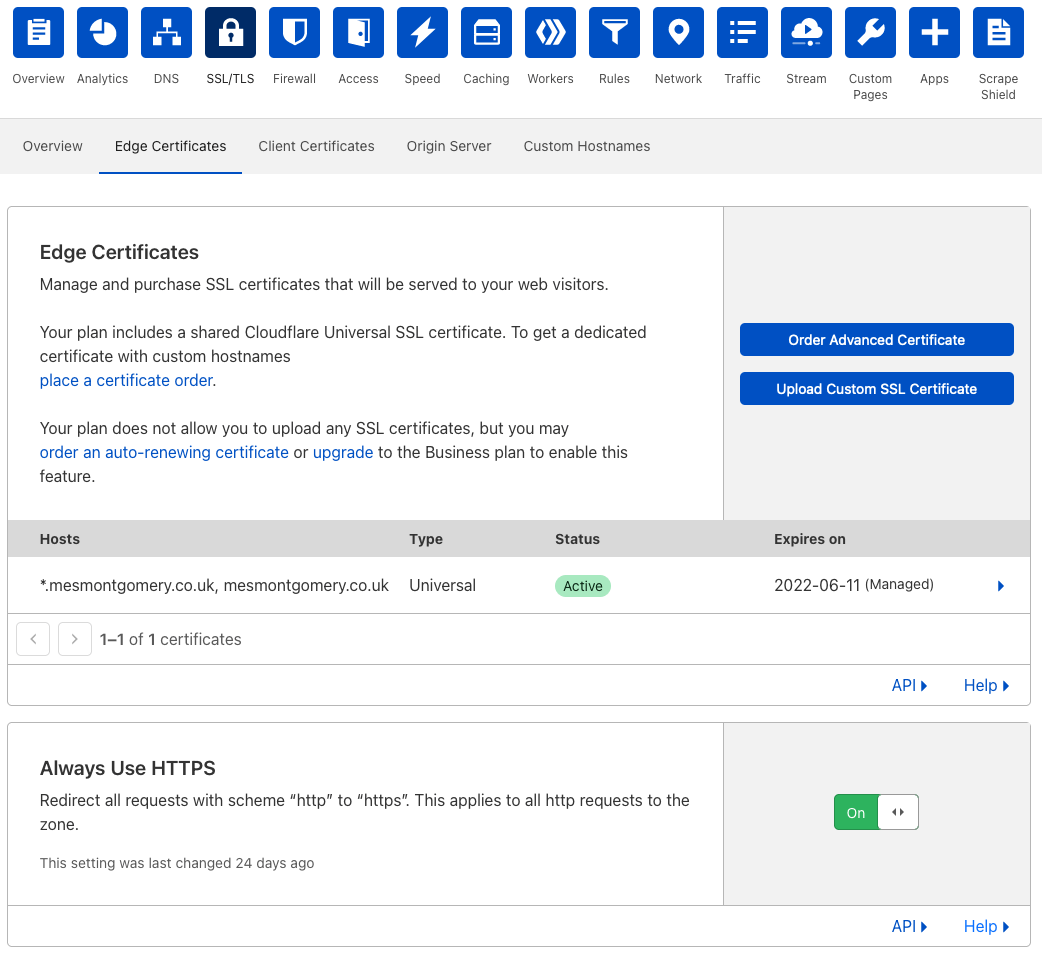

I don’t serve any HTTP traffic. Therefore one way of accomplishing this without a page rule is via the zone global setting of Always use HTTPS in the SSL/TLS app.
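To illustrate what that setting does, here is a minimal sketch of the equivalent logic. It is not Cloudflare’s implementation; the function name httpsRedirectTarget is hypothetical and only models rewriting the scheme while leaving the host, path and query string untouched.

```javascript
// Hypothetical helper: returns the HTTPS equivalent of an HTTP URL,
// or null when the request is already on HTTPS (or another scheme).
function httpsRedirectTarget(requestUrl) {
  const url = new URL(requestUrl);
  if (url.protocol !== "http:") {
    return null; // nothing to redirect
  }
  url.protocol = "https:";
  return url.toString();
}

console.log(httpsRedirectTarget("http://ja.mesmontgomery.co.uk/posts/?page=2"));
// https://ja.mesmontgomery.co.uk/posts/?page=2
```

With the zone-wide setting enabled, none of this needs to be written or deployed; the sketch only shows the transformation being applied on my behalf.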

Alias redirection

It is possible to provide redirection using Cloudflare Workers. I used their reference template to accomplish this. The code is below:

    // Every request to an alias host is redirected to this canonical origin.
    const base = "https://ja.mesmontgomery.co.uk"
    const statusCode = 301

    async function handleRequest(request) {
      const url = new URL(request.url)
      const { pathname, search } = url
      // Keep the original path and query string on the destination.
      const destinationURL = base + pathname + search
      return Response.redirect(destinationURL, statusCode)
    }

    addEventListener("fetch", async event => {
      event.respondWith(handleRequest(event.request))
    })
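The redirect target is simply the canonical base plus the incoming path and query string. The sketch below pulls that step into a standalone function so it can be tried outside the Workers runtime. The name buildRedirectTarget and the alias host www.example.com are hypothetical, used only for illustration.

```javascript
// Hypothetical helper mirroring the URL handling in the Worker:
// keep the path and query string, swap the origin for the canonical base.
function buildRedirectTarget(requestUrl, base) {
  const { pathname, search } = new URL(requestUrl);
  return base + pathname + search;
}

const base = "https://ja.mesmontgomery.co.uk";

// www.example.com stands in for an alias domain.
console.log(buildRedirectTarget("https://www.example.com/2020/02/post/?ref=feed", base));
// https://ja.mesmontgomery.co.uk/2020/02/post/?ref=feed
```

Because the path and query string carry over, deep links to an alias land on the matching page rather than the home page.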

The resulting configuration:

Migration

Each Pages project provides a pages.dev record. It was possible to set up the site in parallel and test it with that record.

Redirecting DNS was the last step. The final DNS entry is a CNAME to the original pages.dev record.

As I had a CNAME record in place already to Amplify, the wizard correctly identified this and offered to replace it.

Caching

I was curious how an edge deployment model would affect caching. The product documentation here explains what to expect.

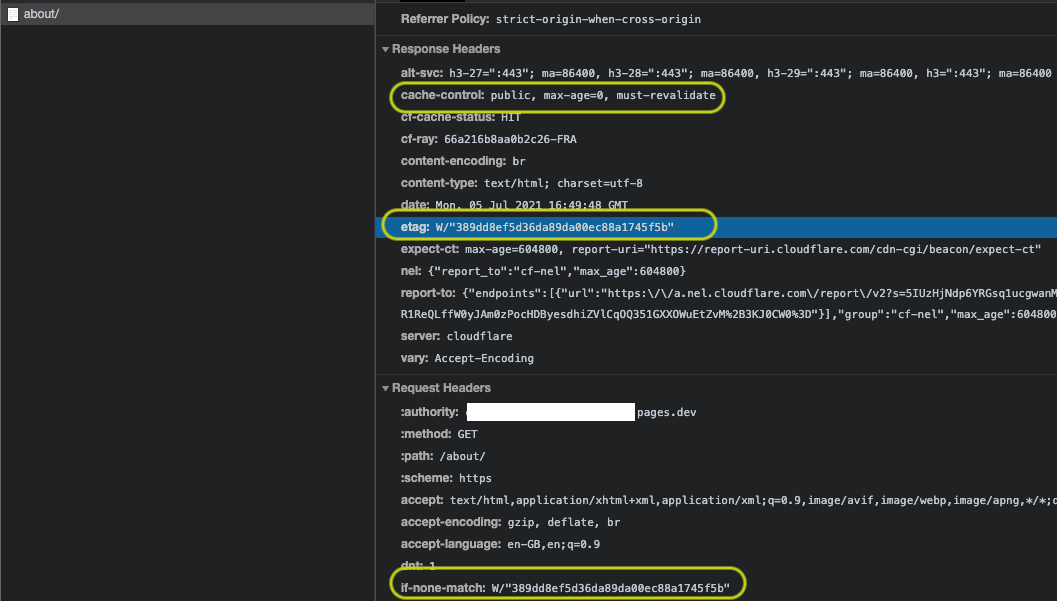

As much as possible, Pages sets ETag and If-None-Match headers to allow clients to also cache content in their browsers.

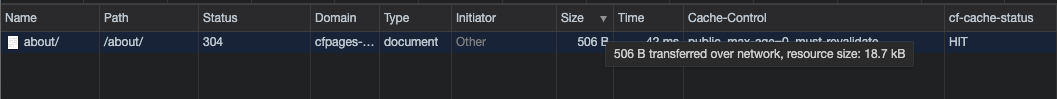

However, I was initially surprised to see network transfer for pages I had recently visited. Each request appeared to be approximately 500 bytes. The cause turned out to be the default Cache-Control value of max-age=0,must-revalidate. For example:

We can see the validation attempt in the if-none-match request header, and the etag response header confirming that the browser can use its cached resource:

Mozilla document this behaviour. For now, I have elected to add a max-age value to the content via a page rule.
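To see why max-age=0 leads to a request on every visit, here is a simplified sketch of the freshness check a cache performs. The function names are hypothetical, and the model is deliberately reduced: it treats a missing max-age as stale, whereas real browsers may apply heuristic freshness in that case.

```javascript
// Extract max-age (in seconds) from a Cache-Control value, or null if absent.
function maxAgeSeconds(cacheControl) {
  for (const directive of cacheControl.split(",")) {
    const [name, value] = directive.trim().toLowerCase().split("=");
    if (name === "max-age") {
      return Number.parseInt(value, 10);
    }
  }
  return null;
}

// A cached response is stale once its age reaches max-age.
// Simplifying assumption: no max-age means "revalidate".
function needsRevalidation(cacheControl, ageSeconds) {
  const maxAge = maxAgeSeconds(cacheControl);
  if (maxAge === null) {
    return true;
  }
  return ageSeconds >= maxAge;
}

console.log(needsRevalidation("max-age=0,must-revalidate", 5)); // true
console.log(needsRevalidation("public, max-age=3600", 5)); // false
```

The 3600 second value is only an example, not the value I configured. Any positive max-age lets the browser skip the round trip until the cached copy reaches that age.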

These defaults may suit a front-end application better to ensure clients use the latest assets with minimal overhead.
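The minimal overhead comes from the revalidation exchange itself: when the ETag still matches, the server answers with headers only. Below is a rough model of that exchange. The respond helper and the page object are hypothetical, this is not Cloudflare’s code, and real servers also handle lists of tags and weak validators, which the sketch ignores.

```javascript
// Simplified model of a conditional GET against a single resource.
function respond(requestHeaders, resource) {
  const headers = {
    etag: resource.etag,
    "cache-control": "max-age=0, must-revalidate",
  };
  // The browser echoes the ETag it cached in If-None-Match.
  if (requestHeaders["if-none-match"] === resource.etag) {
    // Unchanged content: status and headers only, no body.
    return { status: 304, headers, body: "" };
  }
  return { status: 200, headers, body: resource.body };
}

const page = { etag: '"abc123"', body: "<html>...</html>" };

const first = respond({}, page); // cold cache
const second = respond({ "if-none-match": first.headers.etag }, page);

console.log(first.status, second.status); // 200 304
```

A headers-only 304 response would be consistent with the small transfers I saw on repeat visits.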

Troubleshooting

There is no doubt migrating to a new platform brings the opportunity to introduce mistakes or differences in function.

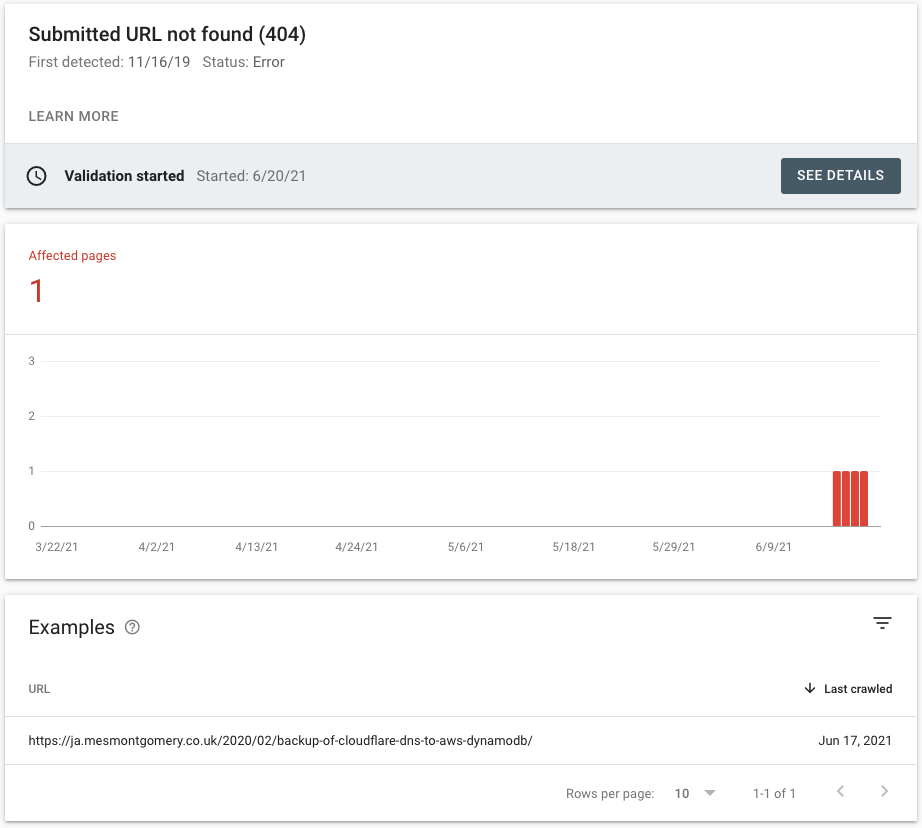

One such occasion arose when Google Search reported to me that a previously working page now returned a coverage issue: Submitted URL not found (404).

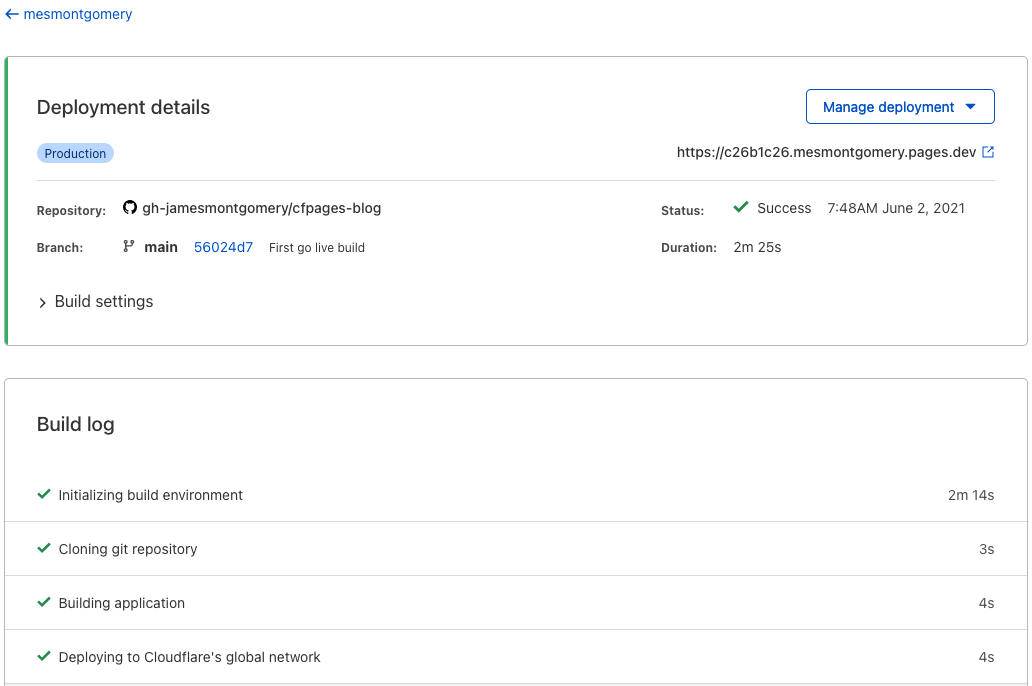

The last deployment indicated no issues:

Next, I checked the source repository. To my surprise, the file was not present on GitHub but was present on my local machine.

Suspecting the file was ignored by Git, I checked whether it was listed via git status --ignored.

    Ignored files:
      (use "git add -f <file>..." to include in what will be committed)
        .DS_Store
        public/2020/.DS_Store
        public/2020/02/

The gitignore file wasn’t what I had used in my last repository. On this occasion, using GitHub’s new repository workflow, I recall selecting a provided option for Visual Studio presets. As I used VS Code, I elected to give it a try.

You can review the entire template here.

Correcting the file to match my requirements stopped the files from being ignored.

Why was it ignored? To find out, we can use git check-ignore -v **/*.

Output below:

    $ git check-ignore -v **/*
    .gitignore:248:Backup*/ public/2020/02 copy 2/Backup-of-cloudflare-dns-to-aws-dynamodb
    .gitignore:248:Backup*/ public/2020/02 copy 2/Backup-of-cloudflare-dns-to-aws-dynamodb/index.html
    .gitignore:248:Backup*/ public/2020/02 copy/backup-of-cloudflare-dns-to-aws-dynamodb
    .gitignore:248:Backup*/ public/2020/02 copy/backup-of-cloudflare-dns-to-aws-dynamodb/index.html

Thanks to Stack Overflow for the pointer.

Line 248 in that version of the file is:

Backup*/

My initial understanding was that this shouldn’t match, as the matching path was lower case. However, on examining the file system, it would appear that case sensitivity doesn’t apply.

The file system, APFS, would appear to be case insensitive, and Scott Hanselman has blogged on some of the resulting challenges.
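To make the effect concrete, here is a small sketch of how the pattern Backup*/ behaves with and without case-sensitive matching. It is a toy matcher, not Git’s implementation: the function names are hypothetical, and only the * wildcard and the trailing slash are handled.

```javascript
// Convert a simple gitignore-style directory pattern into a RegExp.
// Assumption: only "*" is treated as a wildcard; everything else is literal.
function patternToRegExp(pattern, ignoreCase) {
  const name = pattern.replace(/\/$/, ""); // trailing slash means "directory"
  const escaped = name.replace(/[.+^${}()|[\]\\?]/g, "\\$&");
  const source = "^" + escaped.replace(/\*/g, "[^/]*") + "$";
  return new RegExp(source, ignoreCase ? "i" : "");
}

function matchesDirectory(pattern, directoryName, ignoreCase) {
  return patternToRegExp(pattern, ignoreCase).test(directoryName);
}

const directory = "backup-of-cloudflare-dns-to-aws-dynamodb";

console.log(matchesDirectory("Backup*/", directory, false)); // false
console.log(matchesDirectory("Backup*/", directory, true)); // true
```

The first result is what I expected to happen. The second is what appears to have happened on my machine, where the lower-case directory was ignored.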

Lesson learned: check for ignored files when performing a repository migration.
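One way to make that check repeatable is to compare the files on disk with the files Git actually tracks. The sketch below assumes the two lists have already been collected (for example, from a directory walk and from git ls-files). The function name and the example paths are hypothetical.

```javascript
// Return every file that exists locally but is not tracked in the repository.
function missingFromRepo(localFiles, trackedFiles) {
  const tracked = new Set(trackedFiles);
  return localFiles.filter((file) => !tracked.has(file));
}

// Hypothetical example paths.
const localFiles = ["public/index.html", "public/2020/02/index.html"];
const trackedFiles = ["public/index.html"];

console.log(missingFromRepo(localFiles, trackedFiles));
// [ 'public/2020/02/index.html' ]
```

An empty result before the first deployment would have caught the missing page before Google did.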

Performance measurement

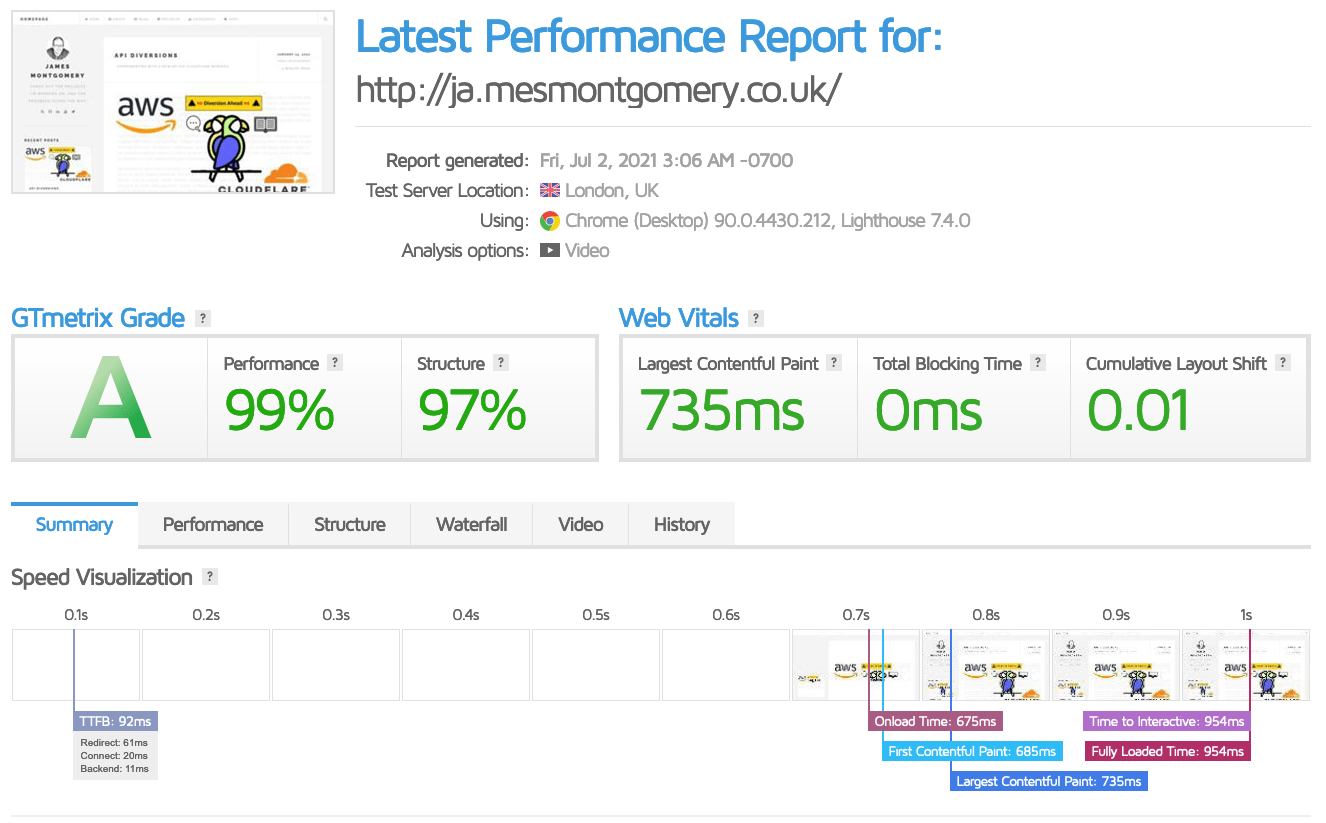

I was curious how this would perform in the end. I have previously documented performance using GTMetrix. The Cloudflare team are aware that people chase high Lighthouse scores.

I include the above screenshot to show a recent score that the site achieved while running on Pages. It is a great result as far as I’m concerned. I have also linked to a previous post, which includes some screenshots from 2019. On the face of it, this will look like a massive performance increase.

It is not the case. There have been changes to the site and methods of performance assessment. I have run a recent test against the Amplify URL with identical content - there is not much in it, either way, to be honest.

Conclusion

Let me start by saying that I’ve no issue with Amplify. In some ways, I consider it superior in features. As always, which platform you want to use depends highly on your needs and existing ecosystem.

For anyone starting in this space, especially if it is a simple Hugo blog, Cloudflare Pages is recommended.

Related posts

- This site is a project

- Migrating site to AWS

- Tighter privacy settings

- Duel stack life - exchanging momentum for speed

- Google Firebase with a side of AWS Amplify

Acknowledgements

- Thanks to Cloudflare, GitHub and GTMetrix for the capabilities in their free plans.

- Thanks to the team at Excalidraw. I used their excellent whiteboard tool to put the diagrams above together.

- Thanks to OpenMoji for the dove. I have modified the colours on the dove.
